eBay® Land Auctions - Who, Where and How Much?

Author

Steven Raddigan
American River College, Geography 350: Data Acquisition in GIS; Spring 2004

Abstract

This project focuses on the process of using custom software to extract specific data elements from thousands of similar web pages over an extended period of time. The project involved database design, software application development and data integration with ArcMap 8.3. The eBay® internet website was chosen because of the dynamic nature of the site, the volume of data available for collection and the spatial orientation of that data.

Introduction

Founded in September 1995, eBay® is The World's Online Marketplace for the sale of goods and services by a diverse community of individuals and businesses (USA 2010). eBay® has approximately 30 main categories, which are further divided into hundreds of sub-categories, each hosting thousands of auctions daily. The Real Estate category is divided into five sub-categories: Commercial, Land, Residential, Timeshares, and Other. This project will focus on the eBay® Land auctions. There are nearly 1000 auctions occurring daily, with an additional 80 - 100 new items listed each day.

Who is selling? Where are they selling? How many dollars are involved? This project will develop a method to acquire the relevant data from the thousands of web pages that describe these auctions and to illustrate the results spatially. The process will continue down to the county level in order to determine which counties are the most prevalent in the two states having the highest number of auctions.

The goal is to develop a method that aids in the collection, organization, manipulation and analysis of the raw data from the eBay® internet site. The project will involve the development of a software application written in Visual FoxPro that will interface with the eBay® internet pages. ArcMap 8.3 will be used to display the results spatially. The emphasis of this project is not on the results of the eBay® land auctions but on the process and development of the application that acquired the data.

Background

Almost everyone has at one time or another looked at the eBay® website. It offers a large amount of information that would be very time consuming to organize manually in order to study a particular item or category. eBay® is aware of how much information is available and how useful it is, both to those researching items in hopes of joining the millions of eBay® sellers and to a very conservative buyer who wants to pay a fair price for an item, but it closely guards the information.

Not many public or private entities offer access to their databases. Many websites display pages of pertinent information, but additional analysis or a different presentation of the data is necessary to meet the requirements of a research project. Some organizations have a significant amount of data relevant to a specific research project, but the organization of the website pages and the need to load and read each page make collecting it time consuming. Depending on the depth and scope of the project, gathering the data using pen and paper can be almost prohibitive.

Can you ever have too much data? Only if the data is unorganized or irretrievable. This is when the researcher becomes overwhelmed and buried in piles of paper. I was not going to spend a couple of months printing out pages for the eBay® land auctions and then manually entering information into a spreadsheet. I was not able to find a software application that could mole through web pages, extracting data and populating fields in a database. So I created one.

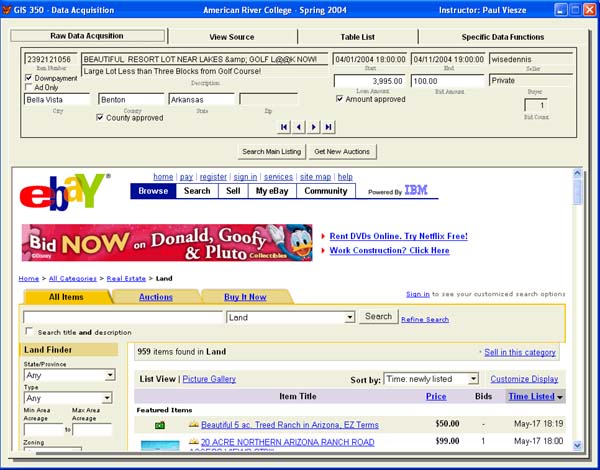

Methods

The process began with a close study of the eBay® land auction pages. Two different types of pages needed to be reviewed: 1) the main listing page, which provides a list of all current auctions, and 2) the auction item page, which provides the specific auction item information. From this review, a database was developed to serve as the collection center for the data elements. The auction item page carried the specific information and led to the identification of several data elements:

STRUCTURE FOR AUCTION ITEM TABLE

A graphical user interface was developed using Visual FoxPro. The design used object-oriented programming and was divided into four page frames to allow the greatest access for data manipulation.

The main listing pages were used to extract the auction item identification number for each active auction. The auction identification numbers are not visible on the main listing screen; they have to be extracted from the HTML source of the main listing page. The first program function, which acquired each active auction item number, had to determine the total number of main listing pages and cycle through each one. A temporary table collected these numbers each time the program was run. Each number was checked against an array containing all auction item numbers from the auction item table. Numbers found in both tables were ignored, while numbers not found in the auction item table were appended to it.

The next function required the application to acquire the beginning HTML source for the auction items. Once the beginning source was captured, additional functions extracted the beginning and ending dates. These dates had to be converted to a datetime field for comparison with the computer date. This was necessary in order to search the eBay® database for the inactive auctions and extract the HTML source for these auctions.

Once the ending HTML source was acquired, the process of extracting the remaining data elements began. The majority of the data elements were located in a consistent manner. Below is a snippet of an HTML source and the associated code necessary to extract the "Winning Bid Amount" data element.
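The project's own Visual FoxPro code and the eBay® HTML it parsed are not reproduced in this copy. As an illustration only, the following Python sketch shows the same general idea: pull item numbers out of a listing page's HTML source, keep only those not already in the auction item table, and pull a winning bid amount out of an item page. The HTML fragments, the `item=` link parameter, the "Winning bid:" label and all function names are assumptions made for this sketch, not the actual 2004 page markup or the author's routines.

```python
import re
from decimal import Decimal

# Hypothetical patterns: the real page markup is not shown in this paper.
ITEM_ID_PATTERN = re.compile(r"item=(\d+)")
WINNING_BID_PATTERN = re.compile(
    r"Winning bid:\s*(?:<[^>]+>\s*)*US \$([\d,]+\.\d{2})"
)


def extract_item_ids(listing_html):
    """Return item numbers found in a listing page, in order, without repeats."""
    seen = set()
    ids = []
    for item_id in ITEM_ID_PATTERN.findall(listing_html):
        if item_id not in seen:
            seen.add(item_id)
            ids.append(item_id)
    return ids


def new_item_ids(listing_ids, known_ids):
    """Keep only the numbers that are not already in the auction item table."""
    known = set(known_ids)
    return [item_id for item_id in listing_ids if item_id not in known]


def extract_winning_bid(item_html):
    """Return the winning bid as a Decimal, or None if the label is absent."""
    match = WINNING_BID_PATTERN.search(item_html)
    if match is None:
        return None
    return Decimal(match.group(1).replace(",", ""))


# Invented HTML fragments, used only to exercise the functions above.
listing_html = (
    '<a href="ViewItem?item=1001">40 acres</a>'
    '<a href="ViewItem?item=1002">5 acre lot</a>'
    '<a href="ViewItem?item=1001">40 acres</a>'
)
item_html = "<td>Winning bid:</td><td><b>US $1,525.00</b></td>"

print(new_item_ids(extract_item_ids(listing_html), ["1001"]))  # ['1002']
print(extract_winning_bid(item_html))  # 1525.00
```

Returning `None` when the label is missing makes it easy to flag pages that use a different layout, which is where the additional If-Then handling would come in.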

Additional data elements were collected in a similar manner, but some required extensive If-Then statements, depending on the complexity of the data element and the options used by eBay® to display the data. The data collection process began February 10, 2004 and continued through April 18, 2004. There were 7430 records added to the auction item table. Criteria were set for the acceptable dataset to be used for the analysis. The criteria were:

After the criteria were applied, the remaining dataset contained 3686 records. These records were analyzed to determine the state in which each property was located. Once the data was assembled, determining which states had the highest number of auctions was straightforward, but neither very challenging nor rewarding. I decided to look at the auctions within each state and determine where in the state they were occurring. The two states having the highest number of auctions were further analyzed to determine the counties involved. In addition, the dollar value of the auctions in these two states was determined. The top sellers were determined from the entire dataset. These analyses resulted in additional tables in the application. The state count table and the county count table were then joined to the state and county layers within ArcMap. The top sellers for the entire dataset were presented in a tabular format. The dollar value of the auctions occurring in the two states having the most auctions was presented on the state county map.
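The counting step described above can be sketched in a few lines of Python. This is a minimal illustration, not the project's Visual FoxPro code: the records are invented sample rows rather than project data, and the field names `seller` and `state` are assumptions.

```python
from collections import Counter

# Invented sample rows for illustration; not taken from the project dataset.
records = [
    {"seller": "seller_a", "state": "AR"},
    {"seller": "seller_a", "state": "TX"},
    {"seller": "seller_b", "state": "AR"},
    {"seller": "seller_c", "state": "AR"},
    {"seller": "seller_a", "state": "AR"},
]


def count_by(rows, field):
    """Count how many rows share each value of the given field."""
    return Counter(row[field] for row in rows)


state_counts = count_by(records, "state")

# The two states with the most auctions, selected for county-level work.
top_two_states = [state for state, _ in state_counts.most_common(2)]

# The three sellers with the most auctions across the whole dataset.
top_sellers = count_by(records, "seller").most_common(3)

# One row per state, in a shape suitable for a table join on the state key.
state_count_table = [
    {"state": state, "auctions": n} for state, n in sorted(state_counts.items())
]

print(top_two_states)
print(top_sellers[0])
print(state_count_table)
```

A count table with one row per state (or per county) and a shared key field is the form that can then be joined to the corresponding map layer.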


Results

An analysis of the data revealed that Arkansas had the most land auction sales during the reporting period, followed by Texas. This information is presented spatially using the following three figures.

eBay® Land Auctions, February 15, 2004 to April 15, 2004

Arkansas Land Auctions By County

A study of the entire dataset determined the top three sellers, further broken down to the state level, as presented below:

TOP THREE SELLERS FOR THE UNITED STATES

nationallandcompany - 245

governmentauction - 181

tmas1 - 143


Analysis

After the count was in for the total number of auctions occurring within each state, I felt a little empty. The process worked, but one map of the United States was not very rewarding, and I felt it didn't demonstrate the full potential of this type of data acquisition. I decided to go deeper into the data, down to the county level. This is where the challenge, and a very time consuming process, emerged.

None of the HTML source pages had a standard for mining out the county name. I decided to write a small routine that would use the ESRI county table and search the HTML source for county names occurring within the state identified. The routine looked for a match in a manner similar to that used for the other data fields. The problem was that the list was in alphabetical order, and the first match moved the search on to the next record. States in which the HTML source referenced a lake and which also had a Lake County produced unreliable data. Other problems occurred when the HTML source described landmarks and points of interest whose names also corresponded to county names. The only way to be sure that there was some integrity to the data was to review each HTML source for the correct county name. If no county name was in the HTML source, I needed to locate the city and then find the county it was in. I searched the census website but was unable to locate any table that listed states, counties and cities. I used three other web sites extensively for this process: www.city-data.com, www.google.com, http://txdot.lib.utexas.edu/. When a location was acceptable and a group of records was identified, I was able to populate the county in batches.

In order to get the correct dollar amount, I found it necessary to determine which auctions were only bids on a down payment and to search out the assumed loan amount. This was a more tedious task than determining the county name. Again, there were no standard search phrases for an assumed loan. Each HTML source was viewed individually and the data was manually entered into the appropriate field. To keep track of which items had been processed, I modified the original table structure to include county and amount approval fields. These two fields allowed me to manage the data through this process and served as the indicator that all records were processed. Because of the extensive amount of time involved in determining the proper county and dollar value for each item, I limited the process to the two states having the highest number of auctions.
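The first-match problem with county names can be reproduced with a short Python sketch. It is an illustration under stated assumptions, not the routine used in the project: the county list is a hypothetical stand-in for the ESRI county table, the description text is invented, and the stricter matcher is only one possible refinement that was not part of the original work.

```python
import re


def naive_first_match(text, counties):
    """Return the first county name (alphabetically) found anywhere in the text."""
    lowered = text.lower()
    for name in sorted(counties):
        if name.lower() in lowered:
            return name
    return None


def county_candidates(text, counties):
    """Return every county that appears as '<Name> County' on word boundaries."""
    found = []
    for name in sorted(counties):
        pattern = r"\b" + re.escape(name) + r"\s+County\b"
        if re.search(pattern, text, flags=re.IGNORECASE):
            found.append(name)
    return found


# Hypothetical county list and invented description text.
counties = ["Baxter", "Lake", "Marion", "Sharp"]
description = "Wooded lot near the lake in Sharp County, minutes from town."

print(naive_first_match(description, counties))  # Lake
print(county_candidates(description, counties))  # ['Sharp']
```

Returning all candidates rather than the first one means a record with zero or several matches can be flagged for the manual review described above instead of being silently assigned a wrong county.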


Conclusions

After my experience with the county name and dollar value, I would recommend that a small subset of the data be used to develop the entire process from start to finish. This would identify any problems that have to be addressed before the final analysis can occur. If I had done so myself, I would have been aware of the county and dollar data collection issues sooner than two weeks before the due date. For the county data, I would have had the time to develop a similar application to go through the www.city-data.com web pages and create a table with attributes for states, counties and cities, as well as any other data I could extract from these pages. An awareness of the dollar value collection issue would have motivated me to process the data as it was collected and not find myself, as I did with the county name, up against the deadline.

References

ESRI State and County Shape Files

www.city-data.com